Hi, my name is Mauro. I study computer science at 42school, Paris.

I started programming in 2019 to make games and robots.

While I do love video games, I want to do something more useful.

This is why, after discovering the Precious Plastic community, I decided to orient myself toward artificial intelligence.

email: mauroabidal@yahoo.fr

discord: Kantic

Artificial Intelligence Projects

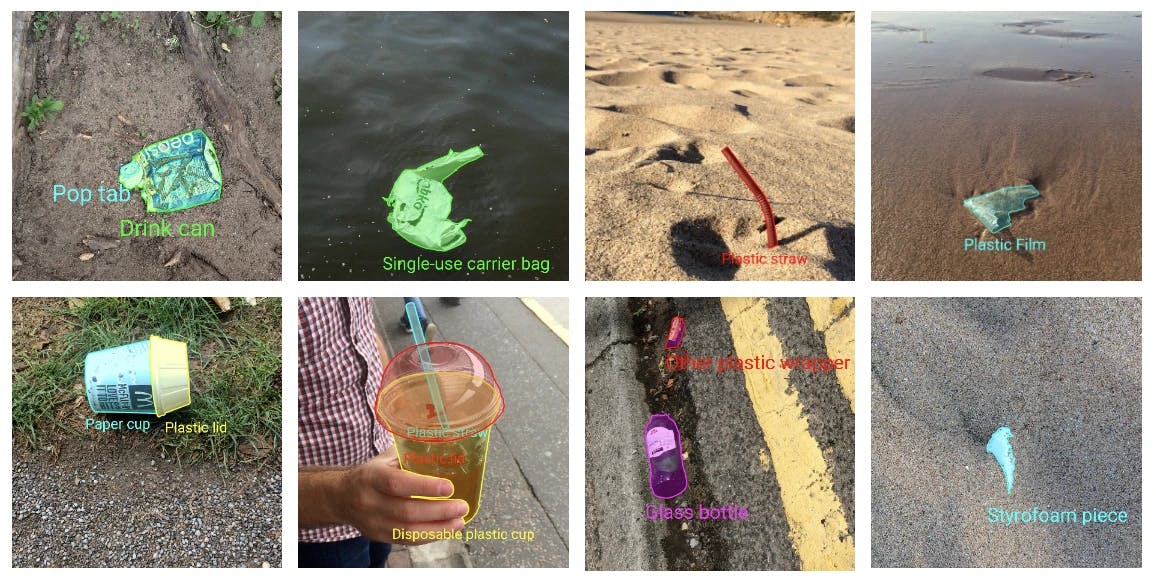

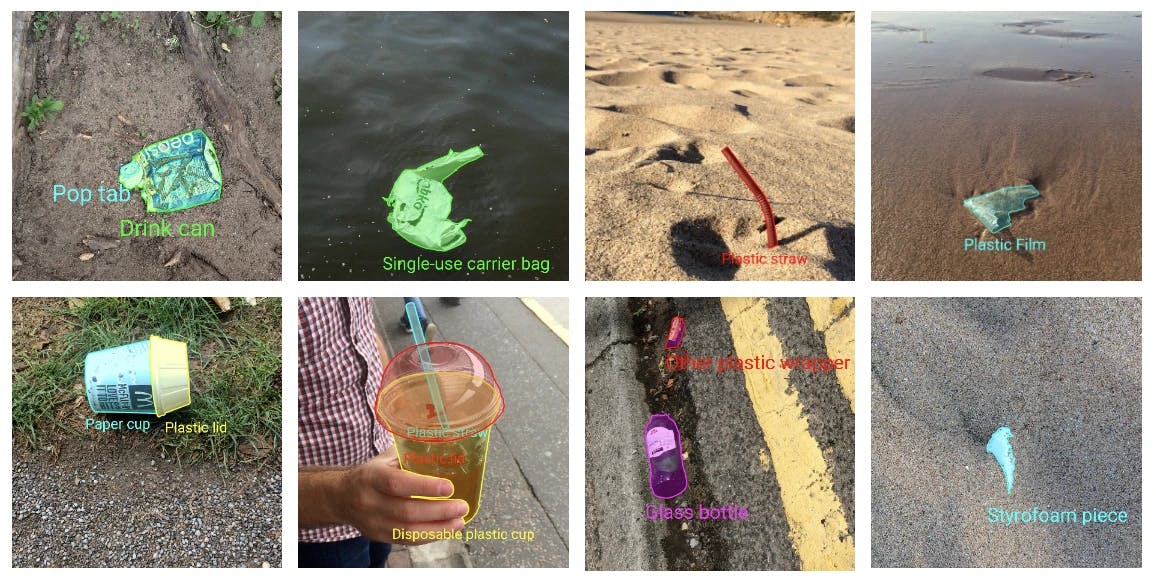

Trash Object Localization on TACO Dataset

TACO is a growing image dataset of waste in the wild.

It contains images of litter taken under diverse environments: woods, roads and beaches.

These images are manually labeled and segmented according to a hierarchical taxonomy to train and evaluate object detection algorithms.

The images are currently hosted on Flickr, and the TACO maintainers run a server that collects more images and annotations at tacodataset.org.

This is an ongoing toy project to learn how to train a computer vision model and curate a computer vision dataset.

GitHub: https://github.com/MauroAbidalCarrer/DL_waste_computer_vision/tree/main

TACO is a dataset of annotated images of multiple types of trash objects.

Participation in an Open Source Project

I have participated in the local-code-interpreter project.

It is a code interpreter project in which I added the functionality to serialize (and deserialize) the conversation in a notebook.

I also added "conversation slicing", that is, omitting parts of the conversation when feeding it to the LLM.

This keeps the conversation within the LLM context window and reduces the cost of each LLM call.
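As a rough illustration of the idea (not the code from the PR), the sketch below keeps the system message and as many of the most recent messages as fit in a token budget. The names `slice_conversation` and `approx_token_count` are hypothetical, and the word-count "tokenizer" is an assumption standing in for a real one.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]  # e.g. {"role": "user", "content": "..."}


def approx_token_count(message: Message) -> int:
    """Assumption: approximate tokens by counting whitespace-separated words."""
    return len(message["content"].split())


def slice_conversation(
    messages: List[Message],
    max_tokens: int,
    count_tokens: Callable[[Message], int] = approx_token_count,
) -> List[Message]:
    """Return the system messages plus the newest messages that fit the budget."""
    system = [m for m in messages if m["role"] == "system"]
    others = [m for m in messages if m["role"] != "system"]

    # System messages are always kept, so they are paid for first.
    budget = max_tokens - sum(count_tokens(m) for m in system)

    kept: List[Message] = []
    # Walk from newest to oldest and stop at the first message that does not fit.
    for message in reversed(others):
        cost = count_tokens(message)
        if cost > budget:
            break
        kept.append(message)
        budget -= cost

    kept.reverse()  # restore chronological order
    return system + kept


conversation = [
    {"role": "system", "content": "You are helpful"},
    {"role": "user", "content": "a b c d"},
    {"role": "assistant", "content": "e f"},
    {"role": "user", "content": "g h i"},
]
sliced = slice_conversation(conversation, max_tokens=8)
```

With a budget of 8 "tokens", the oldest user message is dropped while the system message and the two most recent messages are kept. A real implementation would likely count tokens with the model's own tokenizer.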

GitHub: https://github.com/MrGreyfun/Local-Code-Interpreter

Serialization PR: https://github.com/MrGreyfun/Local-Code-Interpreter/pull/24

Conversation Slicing PR: https://github.com/MrGreyfun/Local-Code-Interpreter/pull/32

Deserialization PR (still open): https://github.com/MrGreyfun/Local-Code-Interpreter/pull/30

Motor Imagery School Project

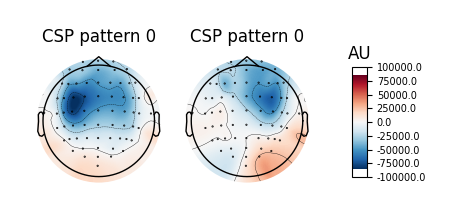

This small school project is an introduction to scikit-learn, MNE (a Python package to process neuroimaging data), and statistics.

In this project, we are tasked with implementing a dimensionality reduction algorithm.

I spent a lot of time trying to implement CSP (Common Spatial Patterns), the algorithm most commonly used for this task. While I couldn't implement it from the ground up, I was able to implement the PCA algorithm and adapt a CSP implementation I found online so that it fits into a scikit-learn pipeline.
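For readers unfamiliar with PCA, here is a minimal NumPy sketch of the technique, not the code from the repository. The class name `SimplePCA` is hypothetical, and the input is assumed to be a 2D array of shape (samples, features). It exposes the `fit` / `transform` methods that scikit-learn pipelines call; a full scikit-learn estimator would usually also inherit from `BaseEstimator` and `TransformerMixin`.

```python
import numpy as np


class SimplePCA:
    """Minimal PCA via eigendecomposition of the covariance matrix."""

    def __init__(self, n_components):
        self.n_components = n_components

    def fit(self, X, y=None):
        X = np.asarray(X, dtype=float)
        # Center the data so the covariance is computed around the mean.
        self.mean_ = X.mean(axis=0)
        covariance = np.cov(X - self.mean_, rowvar=False)
        # eigh is suited to symmetric matrices; eigenvalues come back ascending.
        eigenvalues, eigenvectors = np.linalg.eigh(covariance)
        # Keep the directions with the largest variance.
        order = np.argsort(eigenvalues)[::-1][: self.n_components]
        self.components_ = eigenvectors[:, order].T
        self.explained_variance_ = eigenvalues[order]
        return self

    def transform(self, X):
        # Project the centered data onto the kept components.
        return (np.asarray(X, dtype=float) - self.mean_) @ self.components_.T

    def fit_transform(self, X, y=None):
        return self.fit(X, y).transform(X)


# Points lying on the line y = x: one component captures all the variance.
X = np.array([[0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [3.0, 3.0]])
pca = SimplePCA(n_components=1)
Z = pca.fit_transform(X)
```

EEG epochs are typically 3D (epochs, channels, time samples), so they would need to be reshaped or summarized into 2D before a transformer like this could be applied.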

GitHub: https://github.com/MauroAbidalCarrer/total-perspective-vortex

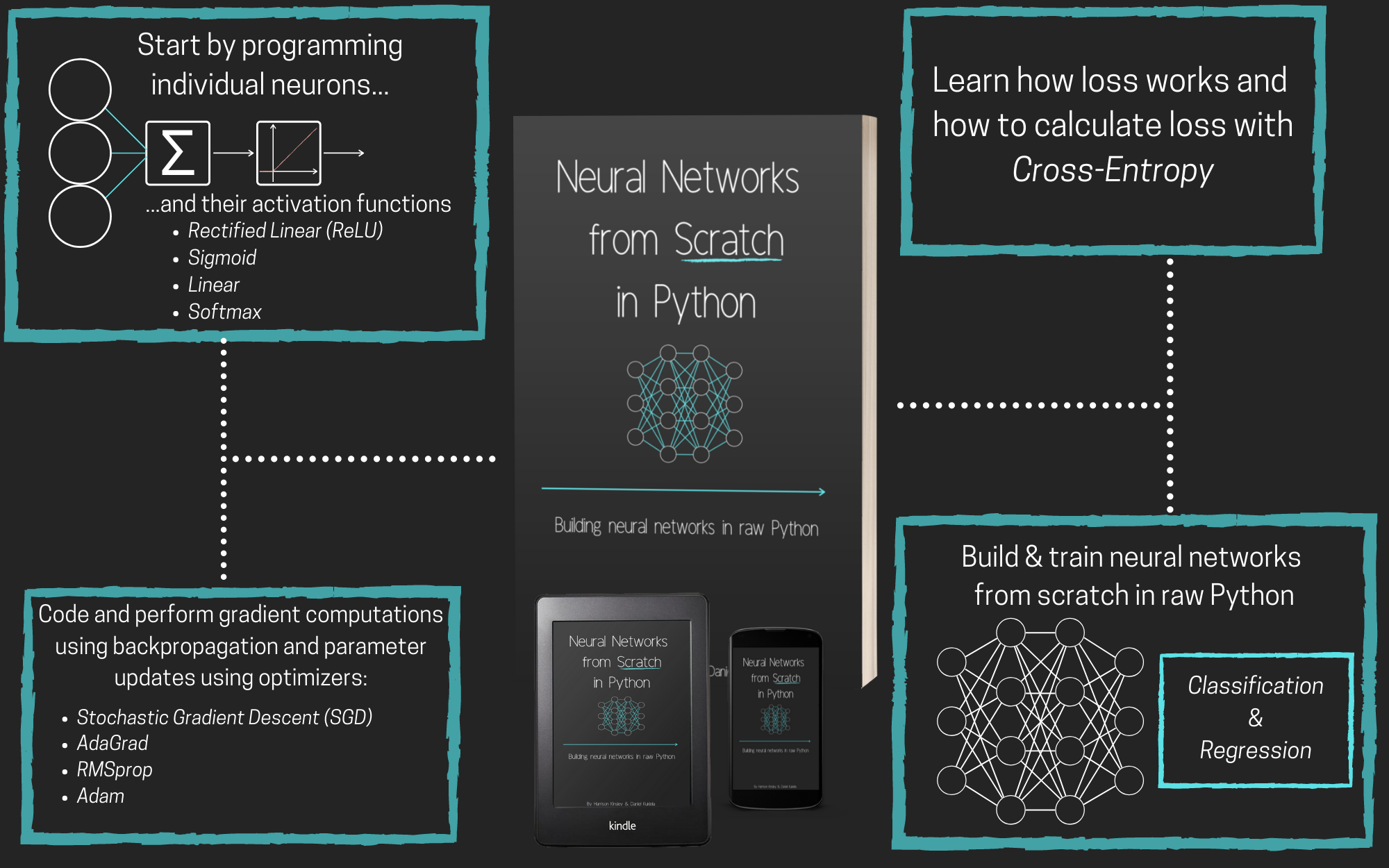

Neural Networks From Scratch book

During the summer I followed the NNFS book.

It taught me how to use Python and NumPy, and how fully connected networks and the most common optimizers (like Adam) work at the lowest level.

This was my first Python project, so the code is a bit messy...
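To give an idea of what "the lowest level" means for an optimizer, here is a small pure-Python sketch of the Adam update rule applied to a single scalar parameter. It is an illustration only, not code from the book or the repository; the function name `adam_minimize` and the hyperparameter values are assumptions chosen for the example.

```python
import math


def adam_minimize(grad, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Minimize a scalar function with Adam, given its gradient function."""
    x = x0
    m = 0.0  # running average of gradients (first moment)
    v = 0.0  # running average of squared gradients (second moment)
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        # Bias correction compensates for m and v starting at zero.
        m_hat = m / (1 - beta1 ** t)
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


# Minimize f(x) = (x - 3)^2, whose gradient is 2 * (x - 3).
result = adam_minimize(lambda x: 2 * (x - 3), x0=0.0)
```

Starting from 0, `result` ends up close to the minimum at 3. In a neural network the same update is applied element-wise to every weight and bias, usually with NumPy arrays instead of scalars.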

GitHub: https://github.com/MauroAbidalCarrer/neuralNetworksFromScratch